GPT-5 Promises Another AI Revolution. Is It Real Progress or Just Vibe Graphing?

A recent dual release from OpenAI has marked a pivotal moment for developers. In the span of a single week, we saw the arrival of two distinct and powerful model families: the highly anticipated proprietary GPT-5 and an open-weight alternative, gpt-oss.

This guide will break down what these releases mean for you, how the models differ, and most importantly, how you can leverage both of them today using DocsGPT. 👨‍💻

A Deep Dive into GPT-5: The New Proprietary Flagship

GPT-5 is positioned as OpenAI's most advanced model, promising a significant leap in reasoning and performance. Let's look past the launch hype to see what's under the hood.

The Core Architecture: A Smart Router System

The most significant change in the user-facing product is the move to a unified, routed system. Instead of manually choosing between different models (like GPT-4o or o4-mini), users get a single GPT-5 system whose intelligent router analyzes each prompt in real time.

- Simple tasks are sent to a fast, efficient base model for quick answers.

- Complex problems requiring deep logic are automatically routed to the more powerful "GPT-5 Thinking" model.
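OpenAI has not published how its router makes decisions, so the following is only a toy illustration of the dispatch idea described above: a keyword-and-length heuristic in Python. The model labels, cue words, and 40-word threshold are all hypothetical; the production router is reportedly a trained system, not a keyword list.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration only; these are not API model IDs.
FAST_MODEL = "base-model"
THINKING_MODEL = "thinking-model"

# Hypothetical cue phrases that suggest multi-step reasoning is needed.
REASONING_CUES = ("prove", "step by step", "debug", "optimize", "why")


@dataclass
class Route:
    model: str
    reason: str


def route(prompt: str, max_fast_words: int = 40) -> Route:
    """Pick a model for a prompt using crude, assumed signals."""
    text = prompt.lower()
    # Substring match on cue phrases: cheap but crude.
    cues = [cue for cue in REASONING_CUES if cue in text]
    if cues:
        return Route(THINKING_MODEL, "reasoning cue(s): " + ", ".join(cues))
    # Very long prompts are treated as complex even without cues.
    if len(text.split()) > max_fast_words:
        return Route(THINKING_MODEL, "long prompt")
    return Route(FAST_MODEL, "short prompt without reasoning cues")


print(route("What is the capital of France?"))
print(route("Prove that the square root of 2 is irrational."))
```

A real router would weigh many more signals (and learn them from usage), but the shape is the same: classify first, then dispatch to the cheapest model that can handle the request.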

Key Capabilities and Benchmarks

OpenAI's launch highlighted impressive gains in several key areas:

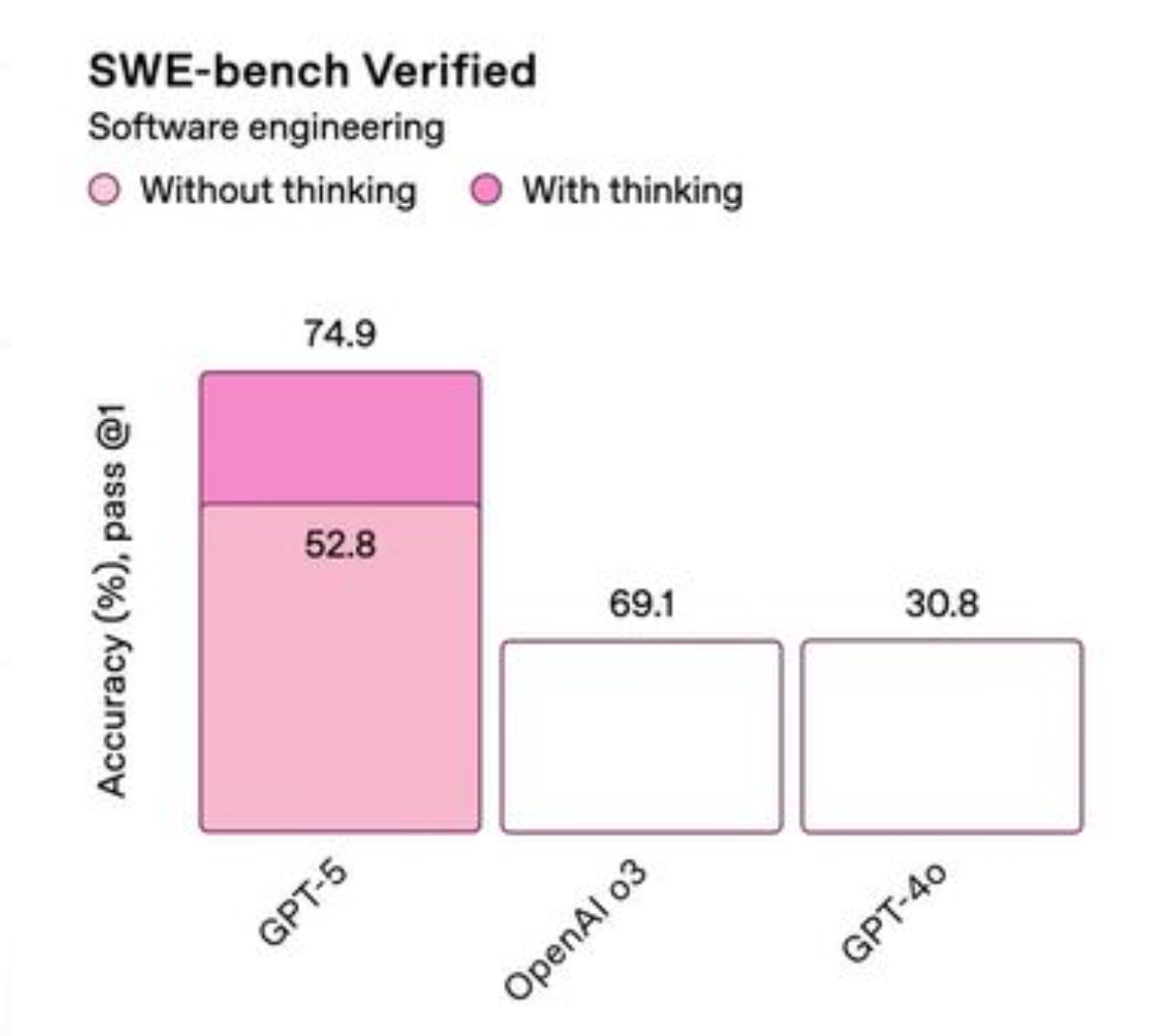

- Advanced Coding & Design: One of its standout features is what the community calls "vibe coding", the ability to generate complex, well-designed applications from simple, natural-language prompts. GPT-5 shows a much-improved grasp of UI/UX principles like layout and typography, producing code that is not just functional but also aesthetically pleasing. It set new state-of-the-art scores on coding benchmarks like SWE-bench Verified (74.9%) and Aider Polyglot (88%).

- "PhD-Level" Expertise: The model shows remarkable improvement in complex domains. It achieved a perfect score (100%) on the AIME 2025 math competition benchmark and 89.4% on PhD-level science questions, showcasing a new level of abstract reasoning. 🧠

- New API Controls: Developers get more granular control through parameters like reasoning_effort (now including a "minimal" setting) and the new verbosity control. The API also now supports a 400K-token context window and, in a major quality-of-life update, allows plain-text tool calls, eliminating the need to escape JSON.
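A minimal sketch of a Chat Completions request body that sets both controls, built as a plain dict so it runs without an API key. It assumes the field names and values documented at launch (reasoning_effort: minimal, low, medium, high; verbosity: low, medium, high); check the current API reference before relying on them, since the Responses API nests the same settings as reasoning.effort and text.verbosity. The helper name build_chat_request is hypothetical.

```python
import json

# Values documented at GPT-5's launch (assumption: verify against the current API reference).
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}
VERBOSITY_LEVELS = {"low", "medium", "high"}


def build_chat_request(prompt: str, reasoning_effort: str = "medium",
                       verbosity: str = "medium", model: str = "gpt-5") -> dict:
    """Build a Chat Completions JSON body, rejecting unknown setting values early."""
    if reasoning_effort not in REASONING_EFFORTS:
        raise ValueError(f"unsupported reasoning_effort: {reasoning_effort!r}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unsupported verbosity: {verbosity!r}")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": reasoning_effort,  # how much hidden reasoning to spend
        "verbosity": verbosity,                # how long the visible answer should be
    }


# A quick lookup-style task: little reasoning, terse answer.
payload = build_chat_request("Summarize this changelog in two lines.",
                             reasoning_effort="minimal", verbosity="low")
print(json.dumps(payload, indent=2))
```

With a recent version of the official openai Python package, the same fields can be passed as keyword arguments to client.chat.completions.create. Pairing low effort with low verbosity suits quick lookups; hard debugging sessions are where higher effort tends to earn its extra latency.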

Early Community Feedback and Real-World Performance

Despite the impressive benchmarks, the initial reception from developers has been mixed. The feedback highlights a growing gap between benchmark scores and the practical developer experience.

- Performance on Existing Code: Many users reported that while the model excels at generating new projects from scratch, it struggles with tasks involving existing, complex codebases. Slowness and "borderline unusable" latency for iterative coding were common complaints.

- Overly Aggressive Safety Filters: Developers expressed frustration with safety guardrails that blocked legitimate use cases. In one reported case, a user could not generate an app featuring iPhone images because of perceived copyright issues.

- Tiered Service Concerns: Paying subscribers voiced concerns that the retirement of older models and restrictive message limits on the new "GPT-5 Thinking" mode effectively degraded their paid service. This perception of being pushed toward more expensive tiers to maintain previous levels of utility was a significant point of friction.

The "Vibegraphing" Incident: A Lesson in Verification

Nothing captured the juxtaposition of superhuman AI and human fallibility better than the presentation's chart errors. In a now-famous slide, the bar representing a 52.8% score was visibly taller than the one for 69.1%.

The community quickly coined the term "vibegraphing" - presenting data based on feeling rather than accuracy. While clearly an honest mistake from the OpenAI team, it served as a humorous but powerful reminder for all developers: always verify the output. If the launch presentation for the world's most advanced AI can have simple errors, it underscores the critical need to keep a human in the loop.
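In the spirit of verifying output, here is a small sanity check that could be run on any bar chart before publishing: larger values must get taller bars, and height per unit of value should stay roughly constant. The function name and pixel heights below are hypothetical; only the 52.8 and 69.1 values come from the slide.

```python
from typing import List, Sequence


def check_bar_chart(values: Sequence[float], heights: Sequence[float],
                    rel_tol: float = 0.02) -> List[str]:
    """Return a list of problems; an empty list means the bars look consistent."""
    if len(values) != len(heights):
        return ["values and heights have different lengths"]
    problems = []
    # Ordering: a larger value must never be drawn with a shorter bar.
    for i in range(len(values)):
        for j in range(len(values)):
            if values[i] > values[j] and heights[i] < heights[j]:
                problems.append(f"{values[i]} is drawn shorter than {values[j]}")
    # Proportionality: pixels per unit should match the first positive bar.
    positive = [(h, v) for h, v in zip(heights, values) if v > 0]
    if positive:
        ref = positive[0][0] / positive[0][1]
        for h, v in positive:
            ratio = h / v
            if abs(ratio - ref) > rel_tol * ref:
                problems.append(f"bar for {v} is off-scale ({ratio:.2f} px/unit vs {ref:.2f})")
    return problems


# Consistent chart: 4 px per percentage point (hypothetical heights).
print(check_bar_chart([52.8, 69.1], [211.2, 276.4]))  # prints []
# Slide-like error: the 52.8 bar drawn taller than the 69.1 bar.
print(check_bar_chart([52.8, 69.1], [300.0, 250.0]))
```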

The Open-Weight Alternative: gpt-oss

For many developers, the biggest news was the surprise release of gpt-oss-120b and gpt-oss-20b - OpenAI's first open-weight models in over five years.

Open-Weight vs. Open-Source

It's important to clarify the term "open-weight." OpenAI has released the trained model parameters (the weights), but not the training code or the dataset. However, these models are released under the highly permissive Apache 2.0 license, which allows for commercial use, modification, and redistribution. This is a green light for developers and businesses to build on top of these models freely.

Meet the Models

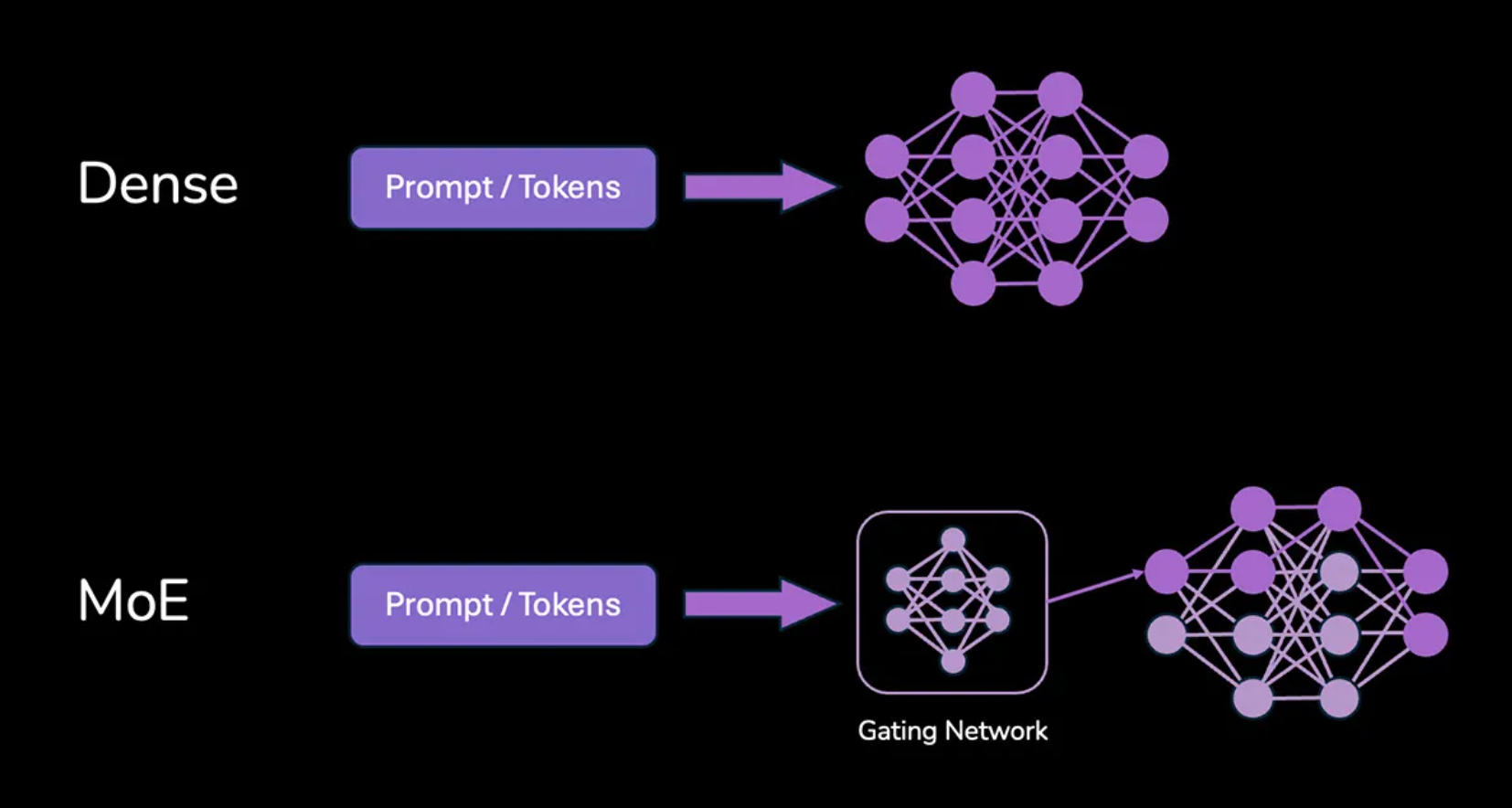

The family uses a Mixture-of-Experts (MoE) architecture, which makes the models highly efficient by activating only a fraction of their total parameters for each token.
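To show why only a fraction of the parameters do work per token, here is a generic top-k gating sketch in plain Python. It is the textbook MoE pattern, not gpt-oss's actual implementation; the gate vectors, the scaling "experts", and top_k=2 are toy assumptions (real layers use learned weight matrices and many more experts).

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate: List[Vector], experts: List[Expert],
                top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token vector x through only its top_k experts."""
    # Router score per expert: dot product of x with that expert's gate vector.
    logits = [sum(g_i * x_i for g_i, x_i in zip(g, x)) for g in gate]
    # Keep the top_k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * y_i for o, y_i in zip(out, y)]
    return out, chosen


# Toy setup: 4 experts that scale their input, plus a log of which ones ran.
calls: List[int] = []


def make_expert(idx: int, scale: float) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * v_i for v_i in v]
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(4)]
gate = [[0.1, 0.0], [0.2, 0.0], [0.9, 0.0], [1.0, 0.0]]
out, chosen = moe_forward([1.0, 0.0], gate, experts, top_k=2)
print(chosen, calls, out)
```

Only two of the four experts appear in calls, which is the whole point: compute scales with the experts actually selected, not with the total parameter count.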

- gpt-oss-120b: A 117-billion-parameter model designed for production-grade, high-reasoning tasks. It is claimed to have near-parity with o4-mini and can run on a single 80GB datacenter GPU like an NVIDIA H100.

- gpt-oss-20b: A 21-billion-parameter model designed to run on consumer hardware, including high-end laptops (like Macs), desktops with consumer GPUs (16GB+ VRAM), and even some mobile devices. 💻
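A back-of-the-envelope check on those hardware claims: weight memory is roughly parameters × bits per parameter ÷ 8. OpenAI's release notes describe MXFP4 quantization of the MoE weights, and MXFP4 stores 4-bit values plus one 8-bit scale per 32-value block, about 4.25 bits per parameter. This sketch assumes every parameter uses the same precision, which is a simplification (the non-MoE weights are kept at higher precision), and it ignores the KV cache and activations, so real memory use is somewhat higher.

```python
def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Approximate storage for model weights alone, in gigabytes (1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


MXFP4_BITS = 4 + 8 / 32  # 4-bit elements + one shared 8-bit scale per 32-element block

for name, params in [("gpt-oss-120b", 117e9), ("gpt-oss-20b", 21e9)]:
    for label, bits in [("16-bit", 16), ("~4.25-bit MXFP4", MXFP4_BITS)]:
        print(f"{name} @ {label}: {weight_memory_gb(params, bits):.1f} GB")
```

At 16 bits, the 117B model would need about 234 GB for weights alone, far beyond one 80GB GPU; at about 4.25 bits it drops to roughly 62 GB, which is why a single H100 becomes plausible, and the 21B model lands near 11 GB, within a 16GB budget.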

Building on OpenAI's historical strength in tool use, both models feature native support for function calling and structured outputs. This makes them well-suited for building complex automations and powering reliable agentic workflows right out of the box. 🤖
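A minimal sketch of the application side of function calling: declare a tool with a JSON Schema, then execute whatever tool call the model returns. It assumes an OpenAI-compatible chat endpoint (for example, the one Ollama exposes under /v1) that returns tool calls in the chat-completions shape, with arguments as a JSON string. The search_docs tool and its parameters are hypothetical, and this is not DocsGPT's own tool integration.

```python
import json
from typing import Any, Callable, Dict, List


def search_docs(query: str, top_k: int = 3) -> List[str]:
    """Hypothetical local tool; a real one would query your knowledge base."""
    return [f"result {i + 1} for {query!r}" for i in range(top_k)]


# Tool declaration in the OpenAI-style function-calling format (sent with the request).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "search_docs",
        "description": "Search the private documentation index.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string"},
                "top_k": {"type": "integer", "minimum": 1},
            },
            "required": ["query"],
        },
    },
}]

# Only functions listed here can ever be executed on the model's behalf.
REGISTRY: Dict[str, Callable[..., Any]] = {"search_docs": search_docs}


def dispatch(tool_call: dict) -> str:
    """Run one tool call returned by the model; return the result as a JSON string."""
    fn = tool_call["function"]
    name = fn["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested unknown tool: {name}")
    args = json.loads(fn["arguments"])  # arguments arrive as a JSON-encoded string
    return json.dumps(REGISTRY[name](**args))


# Simulated tool call shaped like an entry in a chat-completions response.
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "search_docs", "arguments": '{"query": "setup.sh", "top_k": 2}'},
}
result = dispatch(example_call)
print(result)
```

In a full agent loop, the returned string would be appended to the conversation as a tool message and the model called again; the explicit registry keeps the model from invoking anything you did not expose.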

GPT-5 on DocsGPT Cloud 🚀

We are thrilled to announce that GPT-5 is now available on the DocsGPT cloud platform! You can apply its powerful reasoning to your private knowledge bases with a single click.

Log in to your DocsGPT cloud account, select GPT-5 from the model dropdown, and experience the next generation of AI-powered knowledge retrieval.

Ultimate Privacy: Run gpt-oss Locally

If you or your team need absolute data sovereignty, you can run the powerful gpt-oss models locally with DocsGPT for a 100% private solution. We've streamlined the local setup using Docker and Ollama.

Here's a quick overview:

- Clone the DocsGPT repository

- Run setup.sh or setup.ps1, depending on your system

- Select the Serve Local option

- Choose between gpt-oss:20b and gpt-oss:120b

That's it! You are now running a fully private setup on your own machine. For complete instructions, please refer to our Documentation.
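To confirm that the local model is actually being served, you can talk to Ollama directly. This sketch assumes Ollama is reachable at its default address (http://localhost:11434) and uses its /api/tags and /api/chat endpoints; whether your DocsGPT setup exposes Ollama on that host and port is an assumption, so adjust OLLAMA_URL as needed. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address (assumption: adjust for your setup)


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Return the /api/tags response listing locally pulled models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.loads(resp.read())


def model_is_available(tags: dict, name: str) -> bool:
    """Check a /api/tags response for a model such as 'gpt-oss:20b'."""
    return any(m.get("name") == name for m in tags.get("models", []))


def build_chat_body(model: str, prompt: str) -> bytes:
    """JSON body for a single, non-streaming /api/chat request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}], "stream": False}
    return json.dumps(body).encode("utf-8")


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one chat turn to a running Ollama server and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=build_chat_body(model, prompt),
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: the first request may wait for the model to load into memory.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["message"]["content"]
```

With the server running and the model pulled, model_is_available(fetch_tags(), "gpt-oss:20b") should return True, and ask("gpt-oss:20b", "Say hello in one sentence.") returns the model's reply, all without any data leaving your machine.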

The Future is Hybrid, and You're in Control 🏄

With DocsGPT, you don't have to choose. Our platform acts as the bridge, letting you harness the best of both worlds: the cutting-edge intelligence of GPT-5 in the cloud or the uncompromising privacy of gpt-oss on your own hardware.