Local AI Revolution: DeepSeek-R1, Qwen2.5-1M and how to run them on your machine

Move over, cloud giants - the open source AI rebellion is here! 2025 is shaping up to be the year your grandma's laptop could outsmart Silicon Valley's server farms. Let's dive into the juicy details.

🤯 DeepSeek just dropped the mic (and the price tag)

China's DeepSeek unveiled a real monster, with reported total training costs of under $10M.

- DeepSeek-R1 now rivals OpenAI's o1 on math and coding benchmarks

- Full open-source release including their secret sauce training algorithm

- Comes with some "interesting" guardrails

Meanwhile, Qwen (1 million tokens) and MiniMax (4 million tokens) released models with record-breaking context lengths:

context = "The entire Lord of The Rings trilogy" * 2 # 1M token support 🤯
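Curious whether your own wall of text would actually fit? Here is a rough sketch in shell. It leans on the common rule of thumb of about 4 bytes per token for English text, which is an assumption and not the real tokenizer used by Qwen or MiniMax, and the function names are made up for illustration.

```shell
# Ballpark only: ~4 bytes per token is a rule of thumb for English text,
# NOT the model's real tokenizer (assumption).
BYTES_PER_TOKEN=4
CONTEXT_LIMIT=1000000   # the 1M-token window mentioned above

# estimate_tokens <byte_count> -> prints the approximate token count (rounded up)
estimate_tokens() {
  echo $(( ($1 + BYTES_PER_TOKEN - 1) / BYTES_PER_TOKEN ))
}

# fits_in_context <file> -> prints the estimate; exit status 0 if it probably fits
fits_in_context() {
  local bytes tokens
  bytes=$(wc -c < "$1" | tr -d ' ')   # tr strips the padding some wc versions add
  tokens=$(estimate_tokens "$bytes")
  echo "~${tokens} tokens (limit ${CONTEXT_LIMIT})"
  [ "$tokens" -le "$CONTEXT_LIMIT" ]
}
```

Point it at a file with `fits_in_context my_book.txt` (a placeholder filename). For non-English text or code the real count can be noticeably higher, so leave yourself some headroom.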

🎨 The open source artist's toolkit

Janus-Pro-7B is the Banksy of AI image gen:

- Matches DALL-E 3 quality with 7B parameters

- Perfect for generating:

  - Crypto ape NFTs 🦍

  - Questionable meme templates

  - "Totally original" stock photos

Hunyuan3D-2 is the 3D asset option:

- Generates high-res 3D meshes + textures

- Perfect for:

  - Indie game devs tired of $1000/month Unity assets

  - Metaverse enthusiasts building their 17th virtual nightclub

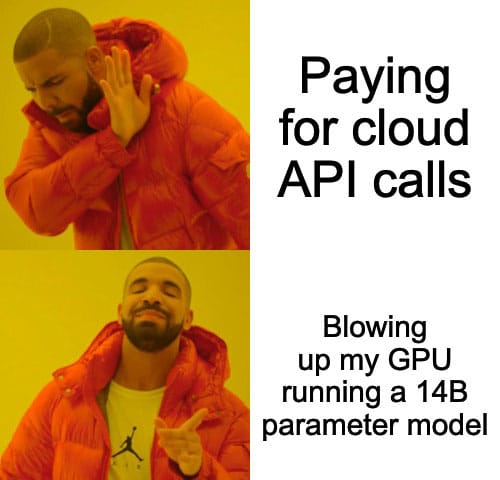

🔥 How to join the Local AI party (No cloud hangover)

Want to run this without Big Tech babysitting your data? Here's the secret sauce for staying safe when a provider's database gets hacked:

Step 1: Choose Your Drink 🍸

# For Linux warriors (Mac folks: the install script is Linux-only, so grab the app from https://ollama.com/download)

curl -fsSL https://ollama.com/install.sh | sh

# Windows gang (yes, we see you)

winget install ollama

ollama run deepseek-r1:7b # Lightweight champ

ollama run deepseek-r1:32b # When you need that Hulk smash
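Once a model is pulled, you can also poke it from scripts instead of the interactive prompt. The sketch below assumes Ollama is listening on its default port (11434) and uses its OpenAI-compatible chat endpoint. The helper names `build_chat_payload` and `ask_ollama` are hypothetical, and the JSON escaping only handles single-line prompts.

```shell
# Assumption: Ollama is running locally on its default port.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# build_chat_payload <model> <prompt> -> prints a JSON request body
build_chat_payload() {
  local prompt
  # Escape backslashes and double quotes so the prompt is valid inside JSON.
  # Newlines are NOT handled, so keep the prompt on one line.
  prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}' \
    "$1" "$prompt"
}

# ask_ollama <model> <prompt> -> sends the request and prints the raw JSON reply
ask_ollama() {
  build_chat_payload "$1" "$2" |
    curl -s "$OLLAMA_URL/v1/chat/completions" \
      -H "Content-Type: application/json" \
      -d @-
}

# Usage (needs a running Ollama server and a model tag you have pulled):
#   ask_ollama deepseek-r1:7b "Why is the sky blue?"
```

This is the same API shape the next step points a chat UI at, so if this call answers, the plumbing is most likely fine.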

Step 2: Call a friendly bartender 🦖

git clone https://github.com/arc53/DocsGPT.git

cd DocsGPT

# Configure your .env like a pro hacker:

echo -e "API_KEY=xxxx\nLLM_NAME=openai\nMODEL_NAME=deepseek-r1:1.5b\nVITE_API_STREAMING=true\nOPENAI_BASE_URL=http://host.docker.internal:11434/v1\nEMBEDDINGS_NAME=huggingface_sentence-transformers/all-mpnet-base-v2" > .env

# Don't worry: LLM_NAME=openai only means we're using the OpenAI-compatible API format. MODEL_NAME should match a model you've pulled with Ollama.

docker compose build # Magic happening here

docker compose up

Now your personal AI assistant is waiting for you at http://localhost:5173/
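If the page loads but the bartender never answers, a mistyped .env is a usual suspect. Here is a small sanity check, assuming the six keys written in the echo command above are the ones your setup needs (DocsGPT may accept more or fewer; that list is taken from this post, not from its docs). The function name `check_env` is made up for illustration.

```shell
# check_env [file] -> prints any expected key that is missing from the file;
# exit status is 0 when all keys are present, 1 otherwise.
check_env() {
  local env_file key missing
  env_file=${1:-.env}
  missing=0
  # Key list copied from the .env written earlier in this post (assumption).
  for key in API_KEY LLM_NAME MODEL_NAME VITE_API_STREAMING \
             OPENAI_BASE_URL EMBEDDINGS_NAME; do
    if ! grep -q "^${key}=" "$env_file"; then
      echo "missing: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

Run `check_env` from inside the DocsGPT folder before `docker compose up`. It only checks that each key exists, not that the value makes sense, so a wrong model tag will still slip through.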

🚨 Warning: Side effects may include...

- Developing separation anxiety from your model weights

- Your GPU sounding like a jet engine

- Mistaking real conversations for chatbot interactions

Enterprises: If you need a truly private AI solution for your data, we've got you covered too (but where's the fun in that?).

Local AI: Because sometimes you want your questionable content generations to stay between you and your overheating GPU. 🔥